Unlocking LLM Potential: The Critical Role of Data Quality and Governance

In the rapidly evolving landscape of artificial intelligence, Large Language Models (LLMs) have captured significant attention for their transformative capabilities. Yet, beneath the impressive outputs and advanced algorithms lies a fundamental truth that is often overlooked: the success of any LLM depends heavily on the quality of the data it is trained on. Without a robust foundation of high-quality data, even the most sophisticated models can yield unreliable or biased results.

Let’s delve into the essential role data plays in LLM training and why effective data governance is not just beneficial but absolutely critical for achieving meaningful AI outcomes.

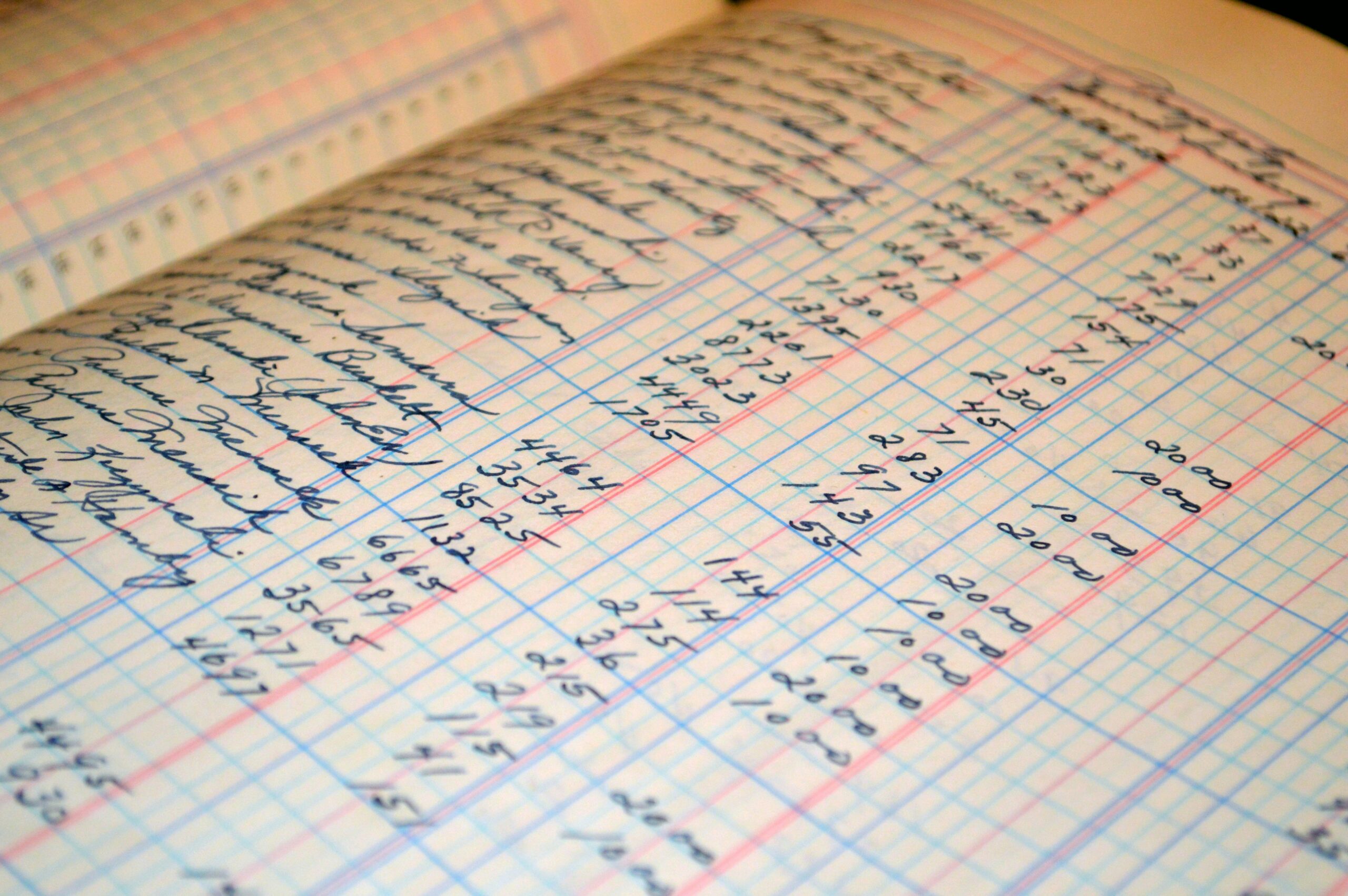

Data: The Essential Ingredient for LLM Development

At its core, training an LLM involves feeding it an immense volume of data. These models are designed to learn patterns, relationships, and nuances from the data they consume. However, quantity cannot compensate for poor quality: large datasets are necessary, but if that data is flawed, the model’s performance will suffer.

Consider this: a learning system exposed to inconsistent or inaccurate information will tend to internalize those flaws. This can lead to reduced accuracy, biased predictions, and a greater risk of “model hallucinations,” where the AI generates plausible but incorrect information. Flawed training data is not the only cause of hallucinations, but a compromised knowledge foundation makes them more likely.

The Risks of Subpar Data: Why Data Quality is Non-Negotiable for LLMs

The integrity of your training data directly impacts the reliability and ethical behavior of your LLM. Here are some common data quality challenges that can undermine AI initiatives:

- Sparse, Noisy, and Incomplete Data: Training an LLM on incomplete or inconsistent data can lead to inaccurate predictions. Incompleteness is not only a matter of missing data points; it can also be introduced by overly aggressive data cleansing that inadvertently removes valuable context. If its input data lacks critical information, the model may struggle to form a comprehensive understanding.

- Harmful, Biased, or Poisoned Data: If the data used to train an LLM contains biases against certain groups, the model is likely to produce discriminatory or untrustworthy outputs. This behavior largely reflects the biases present in the training data rather than a flaw unique to the model itself. Furthermore, if sensitive private information is present in the data, the model may memorize and later reproduce it, resulting in accidental data leaks. A more insidious threat is “training data poisoning,” where malicious actors intentionally corrupt the data to damage the model or manipulate its behavior. These issues carry serious real-world consequences.

- The Commoditization Challenge: As LLMs become more accessible, many organizations are adopting off-the-shelf solutions. However, the true competitive advantage in the AI era often lies not in the generic model, but in an organization’s ability to effectively manage and leverage its unique, proprietary internal data. Without robust data governance, this valuable asset may not be fully utilized, limiting differentiation.

Ensuring LLM Success: The Role of Data Governance

To navigate these challenges and unlock the full potential of LLMs, robust data governance is indispensable. It provides the structured framework of policies, processes, and responsibilities necessary to ensure data quality, security, and compliance throughout its lifecycle.

Data governance helps ensure that your data is accurate, consistent, and trustworthy from the moment it is collected. Key practices include:

- Automated Quality Validation: Implementing automated tools for continuous data quality validation is crucial. These tools can check for schema consistency, statistical anomalies, completeness, and duplicates, identifying and addressing errors early in the data pipeline.

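- Active Learning: Rather than labeling data at random, this approach uses the model itself to select the unlabeled examples that would be most valuable to label next (for example, those it is least certain about), and sends those to human annotators first. This optimizes the labeling process, focusing annotation effort on the data likely to yield the greatest learning benefit.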

- Continuous Improvement: Data changes over time. Regularly updating models with fresh, high-quality data helps prevent performance degradation as real-world inputs drift away from what the model was trained on, and supports continuous improvement.

- AI Governance: Beyond data quality, AI governance encompasses the ethical and responsible use of AI. This includes establishing guidelines for bias detection, algorithmic transparency, and ensuring AI systems align with ethical standards and societal values.
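As an illustration of the automated quality validation described above, here is a minimal Python sketch that runs the four named checks (schema consistency, completeness, duplicates, and a simple statistical anomaly test) over a batch of text records. The record layout (`id`, `text`, `source`), the whitespace-and-case normalization used for duplicate detection, and the z-score test on text length are all illustrative assumptions; real pipelines typically use dedicated data-validation tooling and richer checks such as near-duplicate detection.

```python
import hashlib
import statistics
from dataclasses import dataclass, field

# Hypothetical record schema: field name -> expected Python type.
REQUIRED_FIELDS = {"id": str, "text": str, "source": str}


@dataclass
class ValidationReport:
    schema_errors: list = field(default_factory=list)    # (index, [bad fields])
    incomplete: list = field(default_factory=list)       # indices with empty text
    duplicates: list = field(default_factory=list)       # (index, first index seen)
    length_outliers: list = field(default_factory=list)  # indices with unusual length


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def validate_records(records: list[dict], z_threshold: float = 3.0) -> ValidationReport:
    report = ValidationReport()
    first_seen: dict[str, int] = {}
    clean: list[tuple[int, int]] = []  # (index, text length) of records passing checks 1-3

    for i, rec in enumerate(records):
        # 1. Schema consistency: each required field exists with the expected type.
        bad = [name for name, typ in REQUIRED_FIELDS.items()
               if not isinstance(rec.get(name), typ)]
        if bad:
            report.schema_errors.append((i, bad))
            continue
        # 2. Completeness: the text must contain something besides whitespace.
        if not rec["text"].strip():
            report.incomplete.append(i)
            continue
        # 3. Exact duplicates after normalization, detected via a content hash.
        digest = hashlib.sha256(normalize(rec["text"]).encode("utf-8")).hexdigest()
        if digest in first_seen:
            report.duplicates.append((i, first_seen[digest]))
            continue
        first_seen[digest] = i
        clean.append((i, len(rec["text"])))

    # 4. Statistical anomaly: flag text lengths more than z_threshold
    #    standard deviations from the mean of the clean records.
    lengths = [n for _, n in clean]
    if len(lengths) >= 2:
        mean = statistics.mean(lengths)
        stdev = statistics.stdev(lengths)
        if stdev > 0:
            report.length_outliers = [
                i for i, n in clean if abs(n - mean) / stdev > z_threshold
            ]
    return report
```

Records that fail the schema, completeness, or duplicate checks are excluded from the length statistics, so a handful of malformed rows cannot skew the anomaly threshold.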
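To make the active-learning idea concrete, the following sketch shows pool-based uncertainty sampling, one common active-learning strategy: the current model scores every unlabeled example, and the examples whose predicted class distribution has the highest entropy are sent to annotators first. The `predict_proba` callable, the labeling `budget`, and the example predictions are hypothetical stand-ins for whatever model and annotation capacity you actually have.

```python
import math
from typing import Callable, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (natural log) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(
    pool: Sequence[str],
    predict_proba: Callable[[str], Sequence[float]],
    budget: int,
) -> list[int]:
    """Return indices of the `budget` unlabeled items the model is least certain about."""
    scored = [(entropy(predict_proba(x)), i) for i, x in enumerate(pool)]
    # Highest entropy (most uncertain) first; ties broken by index for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [i for _, i in scored[:budget]]


if __name__ == "__main__":
    # Hypothetical model outputs for four unlabeled documents (two classes).
    fake_predictions = {
        "doc_a": [0.98, 0.02],
        "doc_b": [0.50, 0.50],
        "doc_c": [0.70, 0.30],
        "doc_d": [0.99, 0.01],
    }
    pool = list(fake_predictions)
    picked = select_for_labeling(pool, fake_predictions.__getitem__, budget=2)
    print([pool[i] for i in picked])  # → ['doc_b', 'doc_c']
```

In a full loop, the newly labeled examples are added to the training set, the model is retrained, and selection repeats until the labeling budget or a target accuracy is reached. Entropy is only one uncertainty measure; margin-based or diversity-based selection are common alternatives.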
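One simple, widely used starting point for the bias detection mentioned under AI governance is to compare the rate of favorable decisions across groups, a check often called demographic parity. The sketch below assumes you already have model decisions paired with a group label; the group names in the example are hypothetical, and a single metric like this is a screening signal rather than a complete fairness audit.

```python
from collections import defaultdict
from collections.abc import Hashable, Iterable


def positive_rates(decisions: Iterable[tuple[Hashable, bool]]) -> dict:
    """Fraction of favorable (True) decisions for each group."""
    totals: defaultdict = defaultdict(int)
    favorable: defaultdict = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions: Iterable[tuple[Hashable, bool]]) -> float:
    """Largest difference in favorable-decision rate between any two groups."""
    rates = positive_rates(decisions)
    if not rates:
        raise ValueError("no decisions supplied")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical (group, favorable?) pairs produced by a model under review.
    decisions = [
        ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
        ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
    ]
    print(positive_rates(decisions))          # → {'group_a': 0.75, 'group_b': 0.25}
    print(demographic_parity_gap(decisions))  # → 0.5
```

An organization might, for instance, route a model for human review whenever the gap exceeds a threshold it has set in advance; choosing that threshold is a policy decision, not a universal constant.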

The impact of strong data governance on AI initiatives can be significant. One industry analysis reports that AI and machine learning initiatives are 83% more likely to achieve their intended outcomes when built on robust data governance foundations. This underscores that reliable data is the foundation for advanced analytical capabilities and sound strategic decisions.

In conclusion, for organizations aiming to harness the transformative power of LLMs, prioritizing data quality and implementing comprehensive data governance is not merely a best practice; it is a strategic imperative. By ensuring that your data is a reliable and well-managed asset, you empower your AI initiatives to deliver accurate insights, mitigate risks, and drive genuine innovation.

Bibliography

- Ataccama. Top 5 Data Management Trends in 2025 and Tips on How to Maximize Their Value. https://www.ataccama.com/blog/top-5-data-management-trends-in-2025-and-tips-on-how-to-maximize-their-value

- Mixpanel. What is Data Governance? https://mixpanel.com/blog/what-is-data-governance/

- InterVision. AI and Data Governance. https://intervision.com/blog-ai-and-data-governance/

- Secoda. Data Governance vs. Data Strategy. https://www.secoda.co/blog/data-governance-vs-data-strategy

- Number Analytics. 10 Data Governance Stats Revolutionizing Software & Tech. https://www.numberanalytics.com/blog/10-data-governance-stats-revolutionizing-software-tech

- Granica. Data Quality in Machine Learning GRC. https://granica.ai/blog/data-quality-in-machine-learning-grc

- Dataiku. AI Governance. https://www.dataiku.com/stories/detail/ai-governance/

- Profisee. Data Governance and Quality. https://profisee.com/blog/data-governance-and-quality/